Goliath Cluster

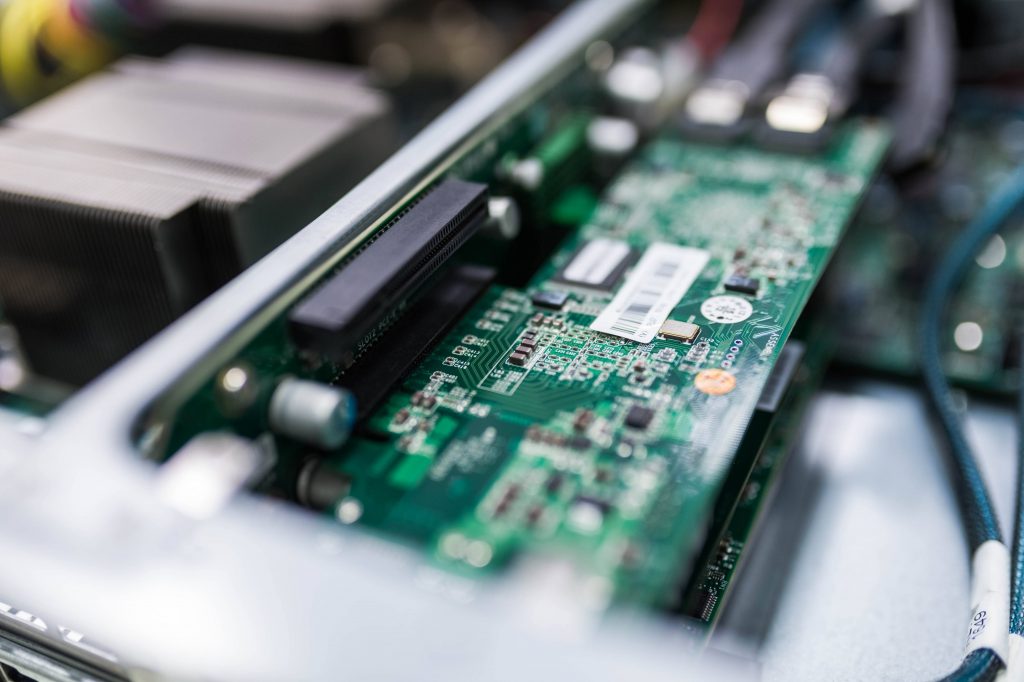

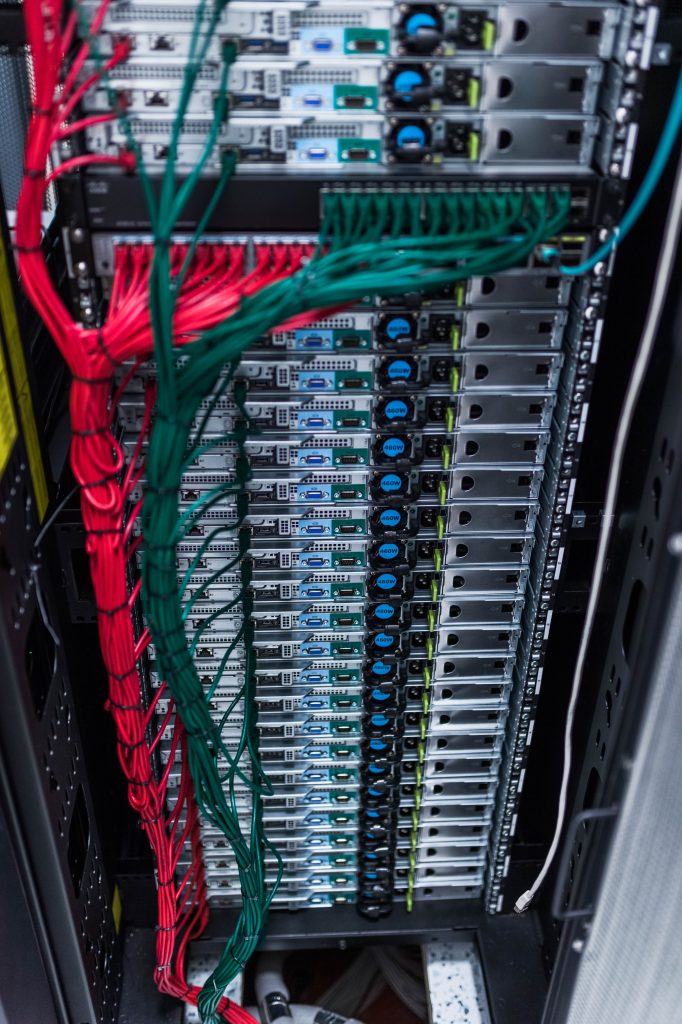

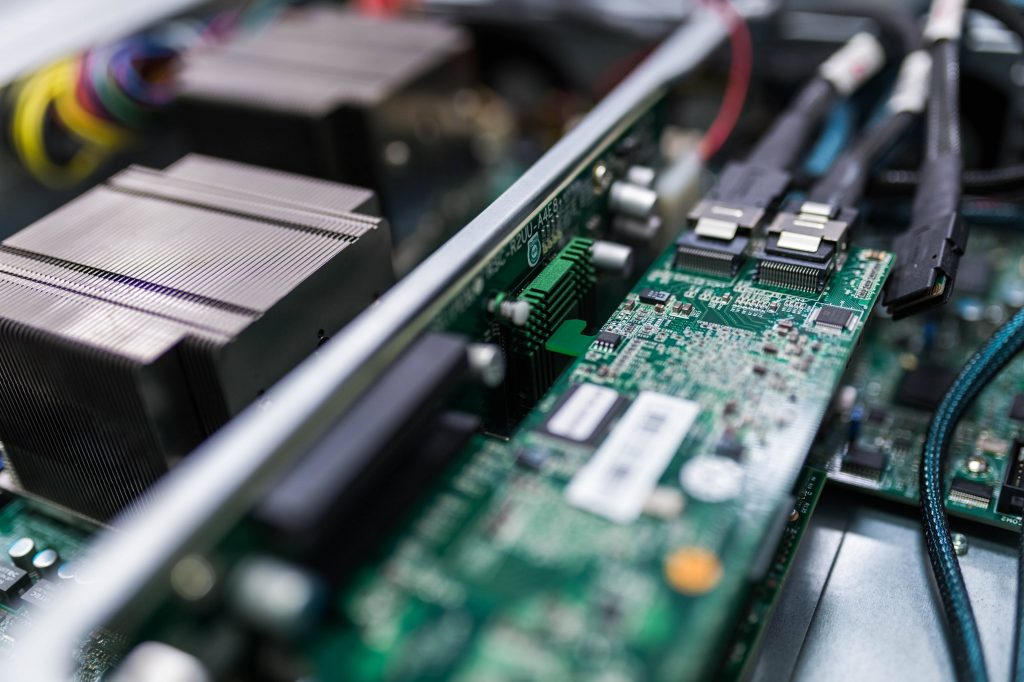

Goliath Cluster uses the HTCondor batch-processing system to distribute tasks to individual computational servers. HTCondor servers receive tasks from both local and grid users and distribute them to worker nodes. The worker nodes are divided into subclusters according to their hardware type: Minis, Lilium, Mahagon, Mikan, Magic, Aplex, Malva, Ib and Ibis.

The Computing Centre of the FZU operates several computer clusters and disk servers, which are connected to national and international grid projects.

The largest cluster, Goliath, provided approximately 10000 logical (5000 physical) computational cores at the beginning of 2021. New servers have been added to the cluster gradually, so it contains both newer and older CPU generations from Intel and AMD. Together with disk servers with a total capacity of over 5 PB (January 2021), it participates in the international EGI grid within the WLCG project (distributed data processing for experiments at the LHC accelerator) as well as in the Open Science Grid (primarily intended for projects in the US). Thanks to cooperation with the national eINFRA e-infrastructure, it has excellent external connectivity (100 Gbps to the private network of the LHC project and 40 Gbps to the Internet).

We administer all servers using Puppet and store all changes in Git. Extensive local monitoring is provided by Nagios and Prometheus, with visualization in Munin and Grafana. Tasks are distributed to servers by the HTCondor batch-processing system. Usage statistics are published on the EGI portal. Computing capacity is extended by forwarding selected tasks to the national IT4I supercomputing centre, and storage capacity is extended using CESNET resources.
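For readers unfamiliar with HTCondor, the following is a minimal sketch of what a task description handed to the batch system typically looks like. It renders a generic HTCondor submit description as text. The function name, the script name `analyse.sh`, the argument `run42.dat` and the resource values are hypothetical, and nothing here reflects the actual Goliath configuration, which may require additional site-specific settings.

```python
def render_submit_description(executable, arguments, cpus=1, memory_mb=2048, count=1):
    """Return the text of a generic HTCondor submit description.

    All values are illustrative assumptions; a real site may require
    extra attributes that are not shown here.
    """
    lines = [
        f"executable     = {executable}",
        f"arguments      = {arguments}",
        # $(Cluster) and $(Process) are standard HTCondor macros that
        # keep the files of individual jobs apart.
        "output         = job.$(Cluster).$(Process).out",
        "error          = job.$(Cluster).$(Process).err",
        "log            = job.$(Cluster).log",
        f"request_cpus   = {cpus}",
        # A bare number in request_memory is interpreted as MiB.
        f"request_memory = {memory_mb}",
        f"queue {count}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: one instance of analyse.sh with 2 cores and 4 GiB RAM.
    print(render_submit_description("analyse.sh", "run42.dat", cpus=2, memory_mb=4096))
```

The resulting text would normally be saved to a file and passed to `condor_submit`; the scheduler then matches the requested cores and memory against the available worker nodes.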

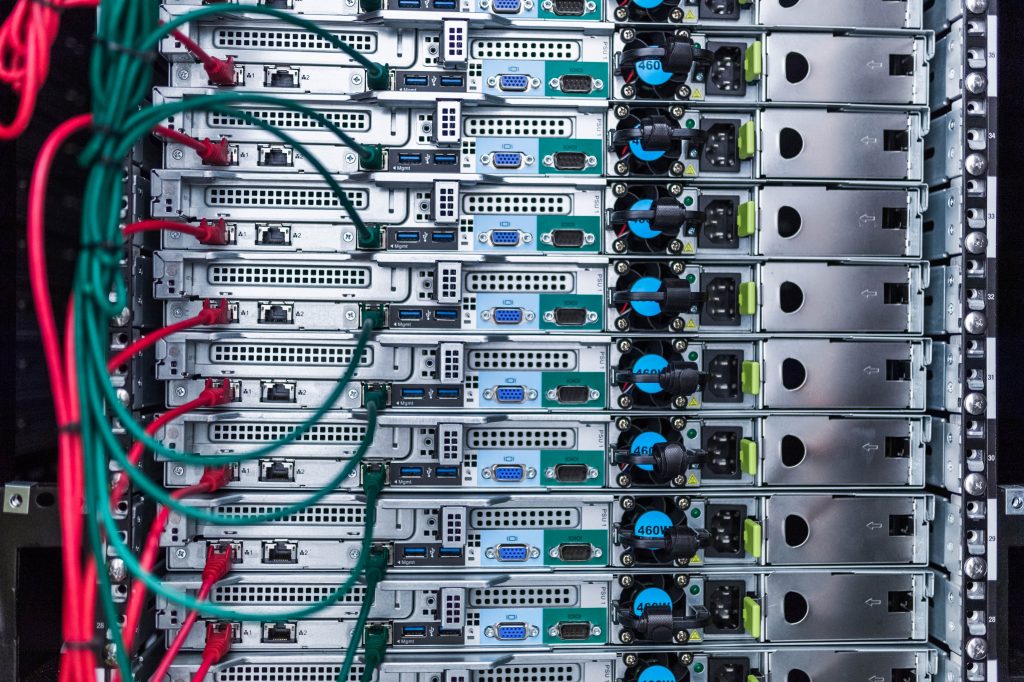

Lilium Subcluster

47 Supermicro 6017R-NTF servers in a 1U form factor. Each server is fitted with two 8-core Intel Xeon E5-2650 v2 processors (2.6 GHz), 96 GB of DDR3 RAM, and two 500 GB disks. Performance is 308 HS06 per server. The measured input power per server under maximum load is 288 W. The subcluster has been operational since the end of December 2020.

The total capacity of the subcluster: 14182 HS06 (1472 cores with HT on).

Mahagon Subcluster

6 HPE Apollo 35 chassis in a 2U form factor, each containing 4 servers, for a total of 24 computational nodes. Each server is fitted with two 16-core AMD EPYC 7301 processors (2.2 GHz), 128 GB of DDR4 RAM, and 1.92 TB Intel S4510 SSD storage. Performance is 736 HS06 per server with HT on. The measured input power of the entire subcluster at maximum load is 8.7 kW. Operational since May 2019.

The total capacity of the subcluster: 17680 HS06 (1536 cores with HT on).

Mikan Subcluster

51 Huawei RH1288 V3 servers. Each server is fitted with two 12-core Intel Xeon E5-2650 v4 processors (2.2 GHz), 128 GB of DDR4 RAM, and two 600 GB SAS disks. Performance is 511 HS06 per server with HT on. The measured input power at maximum load is 342 W per server and 17.5 kW for the entire subcluster. The subcluster was acquired with funding from the VVV OP projects CERNC, AUGER.CZ and Fermilab – CZ. Operational since November 2017.

The total capacity of the subcluster: 26040 HS06 (2448 cores with HT on).

Magic Subcluster

9 Intel Twin chassis in a 2U form factor, each holding 4 servers of the Intel Compute Module HNS2600KP type, for a total of 36 computational nodes. Each node is fitted with two 10-core Intel Xeon E5-2630 v4 processors (2.2 GHz), 128 GB of DDR4 RAM, and two 600 GB SAS disks. Performance is 417 HS06 per server with HT on. The measured input power of the entire subcluster at maximum load is 10.4 kW. Operational since April 2017.

The total capacity of the subcluster: 15001 HS06 (1440 cores with HT on).

Aplex Subcluster

32 half-depth ASUS servers located in an iDataPlex cabinet; they replaced part of the decommissioned iberis machines from 2008. 30 servers have the configuration 2x Intel Xeon E5-2630 v3 (Haswell, 2.4 GHz, 8 cores), 64 GB of DDR4 RAM, and 2x 500 GB SATA HDD, with a performance of 338 HS06 per server. 2 servers are fitted with Xeon E5-2695 v3 processors (14 cores each, 2.3 GHz), 128 GB of DDR4 RAM, and identical disks, with a performance of 595 HS06 per server. The measured electrical input power at maximum load is 220 W per server and 7 kW for the entire subcluster. Operational since December 2014.

The total capacity of the subcluster: 11360 HS06 (1072 cores with HT on).

Malva Subcluster

12 Supermicro twin servers (in 6 chassis of 1U size) with Intel Xeon E5-2650 v2 processors (95 W, 2.6 GHz). Each server is fitted with 2 CPUs, 64 GB of RAM and 2 SATA disks (500 GB, 10k rpm). Performance is 352 HS06 per server. The subcluster processes up to 384 tasks at once (HT on). Power consumption per server at full load is 350 W. Fully operational since December 2013.

The total capacity of the subcluster: 4224 HS06 (384 cores with HT on).

Ib Subcluster

Delivered under a supply contract to fill the free positions in the iDataPlex; operational since January 2011. 26 dx360 M3 servers, each with 2 Intel Xeon X5650 CPUs (6 cores per processor), 48 GB of RAM, and 2x 300 GB SAS HDD. HyperThreading is on. Power consumption at full load is 284 W and performance is 204 HS06 per server.

The total capacity of the subcluster: 5304 HS06 (312 physical cores, 624 cores with HT on).

Ibis Subcluster

The second iDataPlex system, delivered a year later (in operation since January 2010). It contains 65 dx360 M2 servers, each with 2 Intel Xeon E5520 CPUs (4 cores per processor), 32 GB of RAM, and 1x 450 GB SAS HDD. HyperThreading is on. Power consumption at full load is 256 W and performance is 118 HS06 per server.

The total capacity of the subcluster: 7670 HS06 (520 physical cores, 1040 cores with HT on).
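The subcluster totals follow from the per-server figures: total HS06 is the number of servers times HS06 per server, and the core count with HT on is twice the number of physical cores. The sketch below reproduces this arithmetic for the Malva, Ib and Ibis subclusters using the numbers quoted above, and also derives an HS06-per-watt figure as a rough efficiency comparison. The class and field names are illustrative, and the efficiency metric is an assumption of this example, not a figure published by the Computing Centre.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subcluster:
    name: str
    servers: int
    cores_per_server: int   # physical cores: CPUs per server x cores per CPU
    hs06_per_server: int    # benchmark performance per server
    watts_per_server: int   # consumption per server at full load

    def total_hs06(self) -> int:
        return self.servers * self.hs06_per_server

    def logical_cores(self) -> int:
        # HyperThreading exposes two logical cores per physical core.
        return self.servers * self.cores_per_server * 2

    def hs06_per_watt(self) -> float:
        # Illustrative efficiency metric (an assumption of this sketch).
        return self.hs06_per_server / self.watts_per_server


# Figures taken from the subcluster descriptions above.
SUBCLUSTERS = [
    Subcluster("Malva", servers=12, cores_per_server=16, hs06_per_server=352, watts_per_server=350),
    Subcluster("Ib", servers=26, cores_per_server=12, hs06_per_server=204, watts_per_server=284),
    Subcluster("Ibis", servers=65, cores_per_server=8, hs06_per_server=118, watts_per_server=256),
]

if __name__ == "__main__":
    for s in SUBCLUSTERS:
        print(f"{s.name}: {s.total_hs06()} HS06, "
              f"{s.logical_cores()} cores with HT on, "
              f"{s.hs06_per_watt():.2f} HS06/W")
```

The computed totals (4224, 5304 and 7670 HS06; 384, 624 and 1040 cores with HT on) match the capacities listed for these three subclusters.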

The NCK for MATCA is supported by the